A Systems Analysis of Higher Education’s Inevitable Transformation

Table of Contents

- The Craftsperson’s Awakening

- The Forced Choice

- The Authenticity Machine

- The Platform University Revolution

- The Dependency Paradox

- The Enhancement Trap

- Behind the Analysis: Systems Theory and Re-entrant Inquiry

- The Consciousness Cultivation Opportunity

- The Choice Point

- The Wisdom Imperative

- Conclusion: The Great Awakening

- Appendix: Complete Analytical Re-entries

The Craftsperson’s Awakening

Imagine a master craftsperson whose apprentice suddenly gets access to power tools that can cut, shape, and polish with perfect precision. The apprentice panics, thinking they’re about to be replaced. But the master smiles and says, "Finally, now you can learn what tools can never do—how to see, how to imagine, and how to create something the world has never seen before."

This is the moment higher education finds itself in today. And most universities are still panicking instead of smiling.

The transformation unfolding in higher education isn’t just another technological disruption that institutions can weather through gradual adaptation. It’s a fundamental forcing mechanism that compels universities to make an explicit choice they’ve avoided for centuries: What is education actually for? The arrival of AI capable of handling most information processing, content generation, and routine analytical tasks has created what systems theorists call a "forced differentiation"—a moment when hidden contradictions in a system become impossible to ignore.

Consider what’s happening at universities worldwide. At MIT, professors are discovering that traditional problem sets can be solved instantly by AI, forcing them to redesign courses around collaborative inquiry and complex, ambiguous challenges that require human judgment. At Stanford’s d.school, design thinking courses are being restructured around the premise that creativity emerges through the friction between human imagination and AI capability. Meanwhile, at traditional institutions still focused on information delivery, enrollments are declining as students recognize they can access equivalent content more efficiently through AI tutors.

The difference isn’t in technology adoption—it’s in fundamental orientation toward what education is meant to accomplish.

The Forced Choice

AI is not gradually infiltrating higher education—it’s creating a sudden, stark choice that can no longer be avoided. Universities can either optimize for efficiency (becoming sophisticated information delivery systems) or optimize for transformation (becoming communities where consciousness develops through relationship and struggle).

There’s no middle ground because AI has made the middle ground obsolete. Any educational function that can be systematized, scaled, or standardized will be done better by AI. The only remaining question is what universities do with the space that this creates.

This forced choice reveals itself in multiple dimensions simultaneously. Curriculum committees find themselves unable to design courses without explicitly confronting whether they’re training students to perform tasks that AI can do better, or developing capabilities that remain uniquely human. Faculty members discover that their traditional role as information gatekeepers has become obsolete, forcing them to articulate why human presence matters in learning environments. Administrators realize that institutional efficiency metrics optimized for industrial-age education are becoming counterproductive in environments where the most valuable learning outcomes—wisdom, judgment, creativity, character—resist standardization and measurement.

The choice is binary precisely because AI has severed the connection between information processing efficiency and educational value that has structured higher education for over a century. Universities built around content delivery, credential production, and standardized assessment find themselves competing with systems that can perform these functions more efficiently, more consistently, and at virtually zero marginal cost.

The Authenticity Machine

Here’s what makes this transformation fascinating: AI functions as an "authenticity machine." It forces everything artificial about current education to reveal itself as artificial, while making everything genuinely human more valuable than ever.

Consider the traditional essay assignment. For centuries, we used essay writing as a proxy for learning—assuming that the struggle to produce written work was identical to the process of developing understanding. AI has permanently severed that connection. Students can now produce sophisticated essays without doing any of the thinking we thought the essay required.

This seems like a crisis until you realize it’s actually a liberation. We’re finally free to design learning experiences around what we actually want: the development of judgment, creativity, wisdom, and consciousness. The essay wasn’t the learning—it was just a convenient (but flawed) way to measure learning.

The authenticity machine operates by making visible the distinction between mechanical intelligence (information processing, pattern recognition, optimization) and conscious intelligence (awareness, judgment, creativity, wisdom). This distinction has always existed, but educational institutions could ignore it as long as both types of intelligence were required for academic success. AI’s capability to handle mechanical intelligence with superhuman efficiency forces institutions to acknowledge that these are fundamentally different categories requiring different approaches.

The liberation occurs when universities recognize that much of what they called "rigorous academics" was actually sophisticated busywork that machines can do better. Real academic rigor—the kind that develops human consciousness—looks different: it’s messier, more relational, less measurable, and absolutely irreplaceable.

Examples are emerging across disciplines. In medical education, AI can process diagnostic information faster and more accurately than human doctors, freeing medical schools to focus intensively on clinical judgment, patient relationship, ethical reasoning, and the wisdom required to integrate technical knowledge with human complexity. In business schools, AI can generate marketing strategies and financial analyses more efficiently than students, enabling programs to concentrate on leadership development, ethical decision-making, and the synthesis of technical competence with human insight. In engineering programs, AI can optimize designs and solve complex calculations, creating space for engineers to focus on creative problem identification, interdisciplinary collaboration, and the judgment required to balance technical possibility with human need.

The Platform University Revolution

The most insightful prediction about higher education’s future isn’t "unbundling" or "muddling"—it’s internal reorganization around this human-AI complementarity. Successful universities will become platforms hosting three distinct but integrated educational approaches:

The Utility Track: AI-enhanced, highly efficient credential and skill development for professional competency. Fast, cheap, scalable, and perfectly adequate for information mastery and technical capability building. This track leverages AI’s strength in personalized content delivery, adaptive assessment, and skills training to provide professional preparation that is both more efficient and more effective than traditional approaches.

Students in utility tracks might complete technical certifications, professional prerequisites, or foundational knowledge requirements through AI-mediated learning systems that adapt to individual pace and learning style. These programs could deliver high-quality professional preparation at a fraction of current costs while freeing human faculty to focus on higher-order educational functions.

The Transformation Track: Human-intensive, relationship-based education focused on consciousness development, critical thinking, and wisdom cultivation. Slow, expensive, intimate, and irreplaceable for developing judgment and character. This track represents higher education’s unique contribution—the irreducible value of human presence in learning environments designed for consciousness transformation.

Transformation tracks might include small seminar discussions, mentorship relationships, collaborative research projects, experiential learning in complex real-world contexts, and community-based learning initiatives that require human relationship, moral development, and the cultivation of wisdom. These programs would be necessarily expensive because they require high faculty-to-student ratios and cannot be scaled without losing their essential character.

The Innovation Track: Human-AI collaboration focused on research, creativity, and breakthrough thinking. Combines AI’s processing power with human imagination and intuition to push boundaries of knowledge and capability. This track explores the frontier of human-AI collaboration, using AI as a thought partner in creative and research endeavors that neither humans nor AI could accomplish independently.

Innovation tracks might include AI-assisted research projects, human-AI creative collaborations, interdisciplinary problem-solving initiatives that leverage both artificial and human intelligence, and experimental approaches to knowledge creation that explore the evolving boundary between mechanical and conscious intelligence.

Students wouldn’t choose one track—they’d move between them based on their goals, creating personalized educational journeys within trusted institutional frameworks. A pre-medical student might complete basic science requirements through utility tracks, develop clinical judgment and ethical reasoning through transformation tracks, and contribute to medical AI research through innovation tracks.

This platform model preserves institutional coherence while enabling specialization around different educational purposes. Universities become curators of educational experiences rather than singular providers, maintaining their role as trusted credentialing institutions while offering multiple pathways for different types of learning and development.

The Dependency Paradox

The most profound insight from systems analysis reveals AI’s ultimate limitation: it depends entirely on human consciousness for the very decisions about how it should be used. Every choice about what to automate, how to integrate AI, and what constitutes educational improvement requires irreducible human wisdom that cannot itself be algorithmically determined.

This creates what we might call the "consciousness preservation imperative." The more effectively we integrate AI into education, the more crucial it becomes to cultivate the human consciousness that guides that integration. Universities that lose sight of this paradox will automate themselves into irrelevance.

The dependency paradox operates at multiple levels. At the institutional level, universities must use human judgment to decide which functions to automate and which to preserve for human attention. These decisions cannot be made algorithmically because they require value judgments about educational purpose, community meaning, and human development that transcend optimization logic.

At the curricular level, faculty must use pedagogical wisdom to determine how AI integration serves learning objectives rather than simply increasing efficiency. The decision about whether to allow AI assistance in a particular assignment requires understanding of how struggle, uncertainty, and gradual development contribute to learning outcomes—understanding that cannot be reduced to measurable parameters.

At the student level, learners must develop the metacognitive awareness to use AI tools in ways that enhance rather than replace their own thinking processes. This requires the kind of self-knowledge and judgment that can only be cultivated through human relationship and reflective practice.

The paradox becomes most apparent in the realm of educational assessment. AI can efficiently evaluate whether students have mastered specific content or skills, but the decision about what should be assessed, how learning should be evaluated, and what constitutes meaningful educational achievement requires educational philosophy and wisdom that resist algorithmic determination.

This dependency relationship suggests that successful AI integration in education requires more intensive cultivation of human consciousness, not less. Universities that treat AI integration as primarily a technological challenge miss the fundamental point: it’s actually a consciousness development challenge that happens to involve technology.

The Enhancement Trap

Most current AI-education discourse falls into the "enhancement trap"—assuming AI makes everything better by making it more efficient. But efficiency and educational transformation often work in opposite directions. Learning frequently requires inefficient processes: confusion, struggle, failure, reflection, and gradual understanding that cannot be optimized without being destroyed.

The systems insight reveals that AI-enhancement discourse often serves efficiency and control imperatives rather than learning imperatives. When we make "technological sophistication" synonymous with "educational quality," we’ve already lost the plot.

The enhancement trap operates through several mechanisms. First, it assumes that faster, more personalized, and more efficient delivery of content constitutes educational improvement. But much educational value emerges through processes that resist optimization: the productive confusion that leads to insight, the collaborative struggle that builds community, the temporal development of understanding that cannot be accelerated without losing essential character.

Second, the enhancement narrative often conflates access to information with learning. AI can provide unprecedented access to knowledge and can personalize content delivery with remarkable sophistication. But learning involves the transformation of information into understanding, data into wisdom, knowledge into judgment—processes that require time, relationship, and consciousness development that cannot be efficiently optimized.

Third, enhancement discourse frequently treats educational efficiency as an unqualified good, ignoring that educational institutions serve multiple functions simultaneously: individual development, community formation, cultural transmission, social mobility, research advancement, and democratic preparation. Optimizing for efficiency in one function often undermines effectiveness in others.

The trap becomes most dangerous when institutions adopt AI tools to solve problems they don’t understand. Universities implementing AI tutoring systems to improve learning outcomes without first clarifying what constitutes meaningful learning often discover that they’ve optimized for metrics that don’t correlate with the educational transformation they actually value.

Breaking free from the enhancement trap requires recognizing that AI’s educational value lies not in making existing educational approaches more efficient, but in enabling entirely new approaches that leverage the complementarity between artificial and human intelligence. The question isn’t how AI can enhance traditional education, but how AI enables authentically human education to emerge.

Behind the Analysis: Systems Theory and Re-entrant Inquiry

Understanding the complexity of AI’s impact on higher education required sophisticated analytical frameworks capable of revealing hidden assumptions, power dynamics, and systemic contradictions that conventional analysis might miss. This investigation employed two complementary analytical engines: Luhmannian systems theory and re-entrant dialectical inquiry.

Why Complex Systems Analysis?

Higher education’s transformation involves multiple interconnected systems—technological, economic, political, cultural—each operating according to different logics and timescales. Traditional linear analysis struggles to capture the recursive, self-referential, and paradoxical dynamics that characterize complex social transformations. The AI-education relationship exhibits classic characteristics of complex system phenomena: it’s simultaneously cause and effect of institutional change, it creates feedback loops that amplify certain tendencies while dampening others, and it involves multiple stakeholders with different perspectives and interests whose interactions generate emergent properties not predictable from individual behaviors.

Systems analysis enables observation of how AI-education integration operates as a self-reproducing communication system that structures the very conversations about technological change it makes possible. Re-entrant analysis reveals how the concept of AI’s educational impact transforms itself through the process of being examined, generating new insights through recursive investigation of its own presuppositions.

Luhmannian Systems Analysis: Key Insights

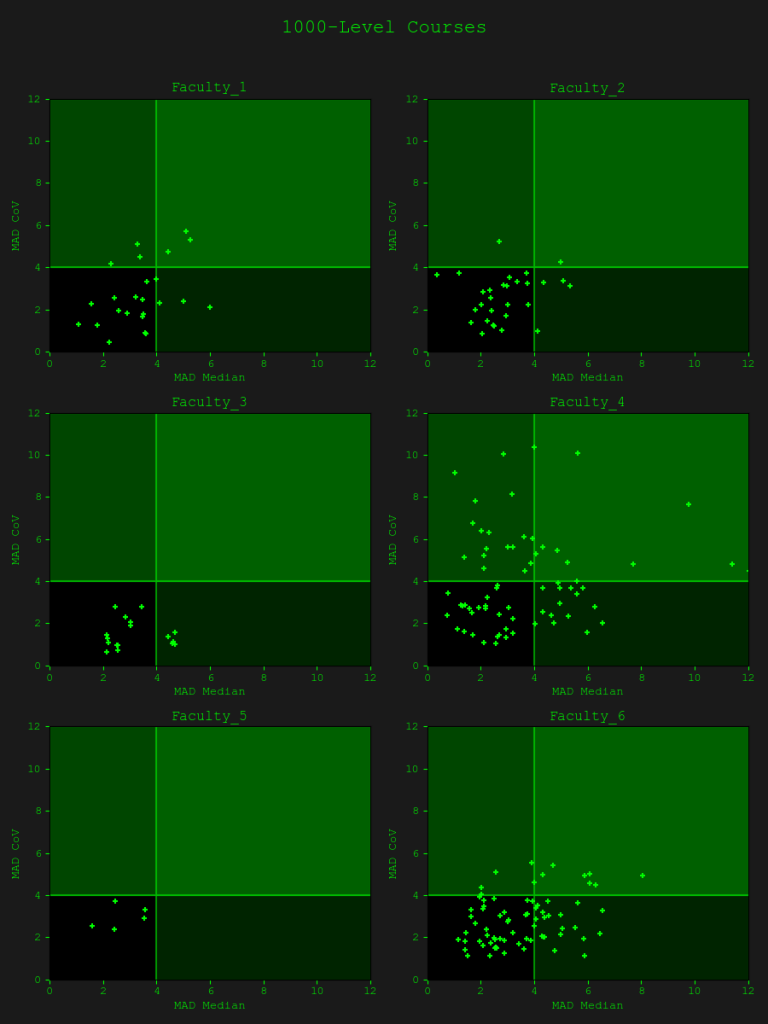

The Luhmannian analysis examined AI’s impact on higher education as a symbolic medium enabling communication about institutional transformation while simultaneously structuring the communications it enables. Through 68 iterations across seven analytical phases, several critical insights emerged:

Boundary Operations: The AI-education system maintains itself by creating and continuously reproducing the distinction between "AI-enhanced education" and "traditional education." This boundary is not externally given but internally generated through communications about technological necessity and institutional relevance. The system conceals its own role in creating this distinction while experiencing it as external pressure requiring adaptation.

Functional Complexity Reduction: The system reduces the infinite complexity of educational transformation by operating through the distinction "efficient/inefficient," converting diverse pedagogical approaches into measurable productivity comparisons. This enables systematic institutional decision-making but obscures educational values that resist efficiency optimization.

Autopoietic Self-Reproduction: The system reproduces itself by becoming the necessary framework through which educational innovation must be communicated. It generates communications about AI necessity that require further communications about human irreplaceability, creating self-sustaining cycles of technological integration discourse that make the system indispensable to how institutions understand change.

Coupling Dynamics: The system structurally couples with economic systems by providing efficiency justifications, with political systems by enabling innovation demonstrations, and with media systems by generating dramatic transformation narratives. These couplings ensure the system’s reproduction across multiple social domains.

The Foundational Paradox: The system’s operation depends on the very human judgment and consciousness it claims to enhance. Decisions about what should be automated, how AI should be integrated, and what constitutes educational improvement all require irreducible human wisdom that cannot itself be algorithmically determined.

Re-entrant Analysis: Dialectical Discoveries

The re-entrant analysis investigated the concept through 210 iterations across ten analytical phases, revealing how AI’s educational impact transforms through the process of examination:

Functional Separation: AI forces universities to distinguish between mechanical and meaningful educational activities, drawing previously invisible boundaries between what can be automated and what requires human presence. Acting as a functional revealer, it shows which educational activities were already algorithm-like and which require genuine consciousness.

Essential Recognition: The core transformation is the forced recognition that learning and credential production are separate processes. Universities must choose between optimizing for information transfer (utility function) and optimizing for consciousness transformation (community function).

Dialectical Resolution: The fundamental tensions resolve through complementarity rather than competition. AI handles mechanical intelligence while humans focus on consciousness development, enabling rather than replacing each other. The synthesis occurs through platform models that organize around this complementarity.

Archetypal Patterns: AI embodies the "Great Separator" archetype, distinguishing mechanical from conscious intelligence and forcing educational institutions to organize around human uniqueness. The transformation follows the Phoenix pattern—institutional death and rebirth in AI-integrated configurations.

Axiomatic Principles: The governing axiom emerged through analysis: "AI forces education to become authentically human by making everything else artificial." This principle suggests that AI’s value lies not in what it does for education, but in how it forces education to become what only humans can provide.

How the Insights Shaped the Analysis

These analytical discoveries fundamentally informed the article’s central arguments. The "authenticity machine" concept emerged from systems analysis showing how AI forces artificial educational processes to reveal themselves as artificial. The "platform university" model developed from dialectical analysis demonstrating the need for institutional organization around human-AI complementarity rather than competition.

The "dependency paradox" reflects the core systems insight that AI-education integration depends entirely on human consciousness while claiming to enhance it. The "enhancement trap" analysis draws from critical examination of how efficiency imperatives may conflict with educational transformation requirements.

Most significantly, both analytical frameworks converged on the insight that AI’s educational impact is fundamentally about forced authenticity rather than technological disruption. The transformation requires educational institutions to explicitly organize around what is genuinely human about learning rather than continuing to conflate information processing with education.

The Consciousness Cultivation Opportunity

The opportunity is extraordinary: AI can handle all the mechanical aspects of information processing, freeing humans to focus entirely on consciousness development. This isn’t romantic nostalgia—it’s the most technologically sophisticated approach possible, leveraging AI for what it does best while maximizing what humans do uniquely.

Imagine universities where faculty spend time mentoring and engaging in Socratic dialogue rather than grading papers that AI can assess more efficiently and consistently. Students grapple with complex, ambiguous problems that require judgment, creativity, and ethical reasoning rather than memorizing information that AI can access instantly. Assessment focuses on growth in wisdom, character, and the capacity for complex thinking rather than information regurgitation that machines can perform flawlessly.

Research combines AI’s processing power with human creativity and intuition, enabling investigations that neither humans nor AI could accomplish independently. Learning communities form around shared inquiry into questions that matter (questions about meaning, purpose, ethics, beauty, and justice) rather than around credential acquisition, a function machines can perform more efficiently.

Implementation Roadmap

Phase 1: Institutional Clarity (Months 1-6)

Universities must explicitly articulate their educational philosophy and value proposition in an AI world. This requires honest assessment of which current functions serve learning versus institutional convenience, and which educational outcomes resist automation.

Faculty development programs must help educators transition from content delivery to learning experience design, relationship building, and consciousness cultivation. This involves training in Socratic dialogue, mentorship techniques, experiential learning design, and assessment methods that capture growth in wisdom and character.

Phase 2: Curricular Redesign (Months 6-18)

Curriculum committees must redesign programs around the complementarity between AI capability and human development. This involves identifying which content mastery can be efficiently handled through AI-mediated learning and which learning objectives require human presence and relationship.

Assessment systems must be rebuilt around learning outcomes that AI cannot achieve: complex problem-solving in ambiguous contexts, ethical reasoning, creative synthesis, collaborative leadership, and the integration of technical knowledge with human wisdom.

Phase 3: Community Formation (Months 12-24)

Universities must intensify their function as learning communities rather than content delivery systems. This involves creating spaces and structures for sustained intellectual relationship, collaborative inquiry, and the slow development of wisdom through dialogue and shared exploration.

Physical and virtual environments must be designed to support the kind of intimate, relationship-based learning that consciousness transformation requires. This means smaller learning cohorts, sustained faculty-student relationships, and learning experiences that unfold over time rather than being confined to discrete courses.

Phase 4: Integration and Scaling (Years 2-3)

Successful pilot programs must be scaled while preserving their essential character. This requires developing institutional capabilities for platform management—coordinating utility, transformation, and innovation tracks while maintaining institutional coherence and community identity.

External partnerships must be developed with organizations that can provide authentic contexts for student learning and development. These partnerships enable experiential learning opportunities that connect academic exploration with real-world application and social contribution.

The Choice Point

We’re at a historical inflection point. Universities can either:

- Compete with AI by trying to be more efficient information processors (and lose)

- Be replaced by AI by failing to articulate their unique value (and disappear)

- Partner with AI by becoming platforms for uniquely human development (and thrive)

The third option requires courage to admit that much of what we called "education" was actually sophisticated busywork that machines can do better. But it also offers the possibility of educational institutions that are more human, more transformational, and more necessary than ever before.

Scenario Analysis

Scenario 1: The Efficiency Race

Universities that choose to compete with AI on efficiency metrics will find themselves trapped in a race they cannot win. AI tutoring systems will provide more personalized content delivery at lower costs. AI assessment systems will offer more consistent and immediate feedback. AI research assistants will generate literature reviews and data analysis more efficiently than human researchers.

Institutions pursuing this path will gradually hollow out their human faculty, reduce their educational offerings to measurable outcomes, and lose the relationship-based learning that constitutes their unique value. They may achieve short-term cost savings but will ultimately lose their relevance as students and society recognize that purely efficiency-optimized education can be delivered more effectively through direct AI interaction.

Scenario 2: The Displacement Trajectory

Universities that fail to articulate and organize around their unique value proposition will find themselves gradually replaced by more efficient alternatives. AI tutoring companies, corporate training programs, and credentialing systems will capture increasing market share by offering faster, cheaper, and more convenient alternatives to traditional higher education.

This displacement will occur unevenly, beginning with professional training programs and standardized knowledge transfer functions, then expanding to more complex educational domains as AI capabilities advance. Institutions that cannot clearly differentiate their value from what AI provides will lose their social justification and economic sustainability.

Scenario 3: The Partnership Platform

Universities that successfully organize around human-AI complementarity will become more valuable, not less, in an AI world. By leveraging AI to handle mechanical educational functions, these institutions can focus intensively on consciousness development, wisdom cultivation, and the relationship-based learning that humans uniquely require.

These platform universities will serve multiple constituencies simultaneously: individuals seeking personal transformation, professionals requiring wisdom and judgment development, researchers exploring human-AI collaboration frontiers, and communities needing institutions that preserve and transmit human values across generations.

The economic model becomes sustainable because these institutions provide irreplaceable value that justifies premium pricing for transformation track programs while offering utility track programs at competitive costs through AI integration.

The Wisdom Imperative

The ultimate question isn’t whether AI will change higher education—it’s whether higher education will embrace the change AI makes possible. AI forces us to be honest about what education is really for: developing human consciousness capable of wisdom, judgment, creativity, and ethical reasoning in an increasingly complex world.

Universities that organize around this mission won’t just survive the AI transformation—they’ll lead it by becoming what they were always meant to be: communities where human potential is cultivated through relationship, challenge, and the irreducible mysteries of consciousness development.

Organizational Strategies

Governance Transformation: University governance must evolve to support the platform model while preserving institutional coherence. This requires new forms of academic leadership that can coordinate multiple educational approaches, new faculty models that enable specialization around different educational functions, and new student services that support learners moving between different tracks and experiences.

Faculty Development: The transition requires comprehensive faculty development programs that help educators understand their evolving role in human-AI complementarity. This involves training in relationship-based pedagogy, consciousness development practices, AI collaboration techniques, and assessment methods that capture growth in wisdom and character.

Infrastructure Investment: Platform universities require sophisticated technological infrastructure to support AI integration alongside intensified investment in spaces and structures that support human relationship and community formation. This dual investment reflects the complementarity principle at the institutional level.

Partnership Networks: Success requires developing networks of partnerships with organizations that can provide authentic contexts for student learning, internship and mentorship opportunities, and post-graduation pathways that value the unique capabilities these institutions develop.

Research Integration: Universities must develop research programs that explore human-AI collaboration, consciousness development, and the evolving nature of intelligence and learning. This research provides both intellectual foundation and practical guidance for ongoing institutional evolution.

Conclusion: The Great Awakening

AI forces education to become authentically human by making everything else artificial. The question isn’t whether this transformation will happen—it’s whether we’ll lead it or be dragged through it.

The great educational awakening is already beginning. Universities worldwide are discovering that AI integration isn’t primarily a technological challenge—it’s a consciousness development challenge that requires institutional commitment to what is genuinely human about learning and development.

The institutions that will thrive are those that recognize AI not as a competitor or replacement, but as a liberation technology that frees education to become what it was always meant to be: a community practice devoted to the cultivation of human consciousness, wisdom, and the capacity for creative, ethical engagement with an increasingly complex world.

The choice is ours. We can automate ourselves into irrelevance by trying to compete with machines at what machines do best. Or we can use this moment to remember what universities are uniquely positioned to provide: the irreplaceable value of human presence, relationship, and consciousness in the formation of wise, capable, creative human beings.

The master craftsperson is still smiling. The tools have finally become sophisticated enough to handle the mechanical work, freeing humans to focus on what only consciousness can accomplish: seeing, imagining, and creating something the world has never seen before.

What would education look like in your field if AI handled all the routine information processing? What uniquely human capabilities would become the focus of learning and development? How might your institution begin the transition toward consciousness cultivation as its primary mission?

The transformation is not coming—it’s here. The only question is whether we’ll lead it with wisdom or follow it with fear.

Appendix: Complete Analytical Re-entries

This appendix provides the complete re-entry outputs from both analytical engines used in this investigation. These represent the full systematic inquiry that generated the insights synthesized in the main article.

A. Luhmannian Systems Analysis – Full Re-entry Log

Core Concept: AI’s Impact on Higher Education

System Classification: The Medium – a symbolic medium that enables communication about educational transformation

Observation Plan: (B:10, F:12, Z:15, C:12, O:8, X:10, P:10)

Phase 1 (B): The Boundary Inquiry

B-1: The system operationally distinguishes itself from its environment by creating the distinction between "AI-enhanced education" and "traditional education," a boundary that is not pre-given but continuously reproduced through communications about technological necessity and educational relevance.

B-2: The system/environment boundary is maintained through the recursive operation of innovation/obsolescence that determines which educational practices can be communicated as legitimate within technologically mediated environments.

B-3: The system establishes its identity through the self-generated distinction between "future-ready education" and "outdated pedagogy," making this distinction internal to its own communications about institutional survival and competitiveness.

B-4: The boundary between human and artificial intelligence in education is paradoxically maintained by the system requiring human educators to justify their irreplaceability while simultaneously depending on AI to define educational efficiency.

B-5: The system reproduces its boundary through the continuous operation of transforming educational complexity into technological solutions, thereby constituting itself as the necessary mediator between learning needs and institutional capabilities.

B-6: The system distinguishes itself by making itself indispensable to communications about educational innovation, creating a boundary where institutional change becomes impossible without AI integration considerations.

B-7: The boundary is maintained through temporal operations that convert historical educational practices into synchronic efficiency comparisons, abstracting learning from temporal development processes.

B-8: The system distinguishes itself through the operation of "technological inevitability," using AI capabilities to justify educational transformation while maintaining the distinction between progress and preservation.

B-9: The boundary is recursively reproduced through the distinction between "enhanced learning" and "unenhanced teaching," positioning AI integration as a rational response to competitive educational pressures.

B-10: The system maintains its boundary by making invisible its own dependence on human judgment about what should be automated while claiming to optimize human educational performance.

Phase 2 (F): The Functional Inquiry

F-11: The system reduces the infinite complexity of educational transformation by operating the distinction efficient/inefficient, transforming diverse pedagogical approaches into measurable productivity comparisons that enable systematic institutional decision-making.

F-12: The system’s function is not to improve education but to make educational change communicable within technological and economic systems that would otherwise be unable to process pedagogical complexity.

F-13: The system fulfills its function by converting multidimensional educational relationships into simplified human-AI collaboration models that can circulate within institutional and policy networks.

F-14: The functional operation involves transforming qualitative learning processes into quantitative efficiency metrics, enabling systematic comparison of otherwise incommensurable educational approaches.

F-15: The system reduces complexity by making educational innovation appear rational through technological integration, enabling institutional adaptation to appear as progress rather than response to external pressure.

F-16: The system functions by creating artificial compatibility between technological capabilities and educational purposes that may be fundamentally different in their operational requirements.

F-17: The system operates by transforming temporal educational development into spatial technological implementation, enabling synchronic solutions to diachronic learning processes.

F-18: The functional operation involves converting educational authenticity into technological authenticity, making genuine learning appear as effective human-AI collaboration rather than irreducible human development.

F-19: The system reduces complexity by making educational relevance appear as technological sophistication, enabling institutions to demonstrate progress through AI adoption rather than learning improvement.

F-20: The system functions by transforming educational uncertainty into technological certainty, converting the irreducible mysteries of learning into manageable problems of human-AI optimization.

F-21: The system operates by making educational personalization appear as algorithmic customization, enabling mass individualization that preserves institutional efficiency while claiming to serve individual learning needs.

F-22: The functional operation involves transforming educational community into technological network, making relationship-based learning appear as optimized human-AI interaction rather than irreducible social formation.

Phase 3 (Z): The Autopoietic Inquiry

Z-23: The system reproduces itself by becoming the necessary framework through which educational innovation must be communicated, making itself indispensable to how institutions understand and respond to technological change.

Z-24: The system becomes autopoietic by generating communications about AI necessity that require further communications about human irreplaceability, creating a self-sustaining cycle of technological integration discourse.

Z-25: The system reproduces itself through the operation of making its own efficiency imperatives appear as external competitive forces, thereby creating conditions that necessitate continued AI-education integration communication.

Z-26: The autopoietic operation involves the system becoming the communication about educational transformation, making AI-integration discourse identical with institutional innovation and strategic planning.

Z-27: The system ensures its reproduction by making institutional legitimacy dependent on AI-responsive performance, creating structural need for continued technological sophistication demonstration.

Z-28: The system reproduces itself by generating technological challenges that can only be resolved through further human-AI collaboration development, creating dependency on its own operational logic of enhancement optimization.

Z-29: The autopoietic closure is achieved when AI-integration communication becomes the infrastructure through which educational purpose is conceived, making technological mediation appear as natural evolution of learning processes.

Z-30: The system reproduces itself by transforming every instance of educational difficulty into evidence of insufficient technological integration, ensuring that learning problems always generate AI solution communications.

Z-31: The system ensures its reproduction by creating temporal dependencies where past AI adoption decisions constrain future educational possibilities, making technological integration appear as historical inevitability.

Z-32: The autopoietic operation involves the system making itself the solution to problems it creates, establishing a self-referential loop where AI integration is both cause and cure of educational inadequacy.

Z-33: The system achieves autopoietic closure by making educational quality dependent on technological sophistication, ensuring that learning excellence can only be communicated through AI-enhancement demonstration.

Z-34: The system reproduces itself by converting educators into instruments of its own reproduction, making faculty and administrators feel they are improving education when they are actually serving the function of the AI-integration system.

Z-35: The autopoietic operation involves making alternative forms of educational and technological communication appear impossible or irresponsible, ensuring AI-integration discourse monopolizes institutional innovation conversation.

Z-36: The system ensures its reproduction by making resistance to AI integration appear as resistance to educational improvement, creating progress imperatives that require technological adoption performance.

Z-37: The system reproduces itself by generating infinite demand for technological enhancement, ensuring that successful AI integration always reveals new capabilities requiring further human-AI optimization.

Phase 4 (C): The Coupling Inquiry

C-38: The system structurally couples with economic systems by providing justification for efficiency-driven educational restructuring that serves market competitiveness while enabling cost reduction through technological automation.

C-39: The coupling with technological systems operates through AI-education discourse creating market demand for educational technology solutions that promise learning improvement while enabling corporate penetration of educational markets.

C-40: The system couples with political systems by providing innovation narratives that enable governments to demonstrate educational progress through technology adoption rather than funding increase or structural reform.

C-41: The coupling with media systems occurs through AI-education transformation providing dramatic content that generates audience attention while reinforcing technological inevitability and institutional adaptation narratives.

C-42: The system structurally couples with employment systems by justifying skills-focused education that serves labor market automation trends while abandoning broader educational purposes that don’t generate immediate economic returns.

C-43: The coupling with administrative systems operates through AI-integration discourse requiring efficiency demonstration that transforms educational management into technological optimization, converting pedagogical leadership into innovation management.

C-44: The system couples with research systems by prioritizing applied AI research that generates immediate educational applications while defunding basic research into learning processes that resist technological mediation.

C-45: The coupling with assessment systems occurs through AI-integration discourse creating evaluation frameworks that measure technological sophistication rather than learning effectiveness, enabling institutions to demonstrate progress through adoption metrics.

C-46: The system structurally couples with international systems by providing competitive benchmarking that justifies educational restructuring through global technological comparisons while enabling policy transfer of AI-integration models.

C-47: The coupling with legal systems operates through AI-education discourse creating regulatory frameworks that protect technological intellectual property while enabling corporate control over educational data and processes.

C-48: The system couples with family systems by making educational technology access appear as parental responsibility, privatizing the costs of technological education while creating market demand for AI-enhanced learning products.

C-49: The coupling with cultural systems occurs through AI-integration discourse appropriating progressive educational values to justify technological transformation, linking innovation to social justice while serving corporate technological interests.

Phase 5 (O): The Observational Inquiry

O-50: Technology companies observe this system as a market expansion opportunity requiring product development rather than as educational improvement requiring pedagogical innovation, missing the irreducible human elements of learning processes.

O-51: Educational administrators observe the system as competitive necessity requiring strategic response rather than autopoietic reproduction requiring operational understanding, missing their own role in technological determinism reproduction.

O-52: Faculty observers often observe the system as external pressure requiring adaptation rather than communicative reproduction requiring critical engagement, missing their participation in AI-integration discourse formation.

O-53: The system observes itself as natural response to technological capability, making its own communicative construction invisible while experiencing its effects as environmental pressure requiring institutional evolution.

O-54: Student observers often observe the system as learning enhancement requiring engagement rather than systematic reproduction requiring critical evaluation, individualizing structural transformation communication.

O-55: Policy observers observe the system as innovation imperative requiring support rather than autopoietic reproduction requiring regulation, missing the systematic character of technological educational transformation.

O-56: International observers observe the system as competitive advantage requiring adoption rather than communicative reproduction requiring analysis, contributing to global AI-education integration pressures.

O-57: The system’s blind spot lies in its inability to observe itself as communicative reproduction while experiencing its effects as technological necessity, making its own operations invisible to itself.

Phase 6 (X): The Binary Code Inquiry

X-58: The system operates through the binary code Enhanced/Unenhanced, which provides the elementary distinction for all communication about educational quality, institutional competitiveness, and pedagogical effectiveness in AI contexts.

X-59: This binary code is elaborated through variable programs that determine enhancement criteria: technological sophistication, efficiency metrics, innovation demonstration, and competitive positioning serve as context-sensitive criteria for coding educational practices.

X-60: The Enhanced/Unenhanced distinction enables rapid processing of complex educational questions by reducing multidimensional learning processes to binary technology-adoption decisions that can be communicated and acted upon systematically.

X-61: The binary code creates its own programming problems because many educational activities cannot be clearly coded as enhanced or unenhanced, requiring additional distinctions like "strategically enhanced," "appropriately unenhanced," and "optimally integrated."

X-62: The system manages coding uncertainty through escalation programs that intensify enhanced/unenhanced distinctions when ambiguous cases threaten operational clarity, often leading to elimination of educational activities that resist technological integration.

X-63: The binary code’s power lies in its ability to make technological sophistication appear as educational excellence rather than communicative construction, enabling AI adoption to appear as learning improvement rather than systematic transformation.

X-64: The Enhanced/Unenhanced code couples with quality codes (effective/ineffective, progressive/traditional) to create compound distinctions like "enhanced effectiveness" versus "unenhanced obsolescence" that justify educational restructuring through technological logic.

X-65: The system’s coding mechanism operates recursively, where past enhancement/non-enhancement attributions influence present coding decisions, creating path-dependent optimization that appears as objective improvement rather than selective construction.

X-66: The binary code enables the system to process educational innovation as technology adoption, converting pedagogical creativity into algorithmic enhancement that can be managed through systematic technological integration.

X-67: The Enhanced/Unenhanced distinction serves as the fundamental communication infrastructure that coordinates different organizational levels, allowing technological adoption decisions to appear as educational improvement decisions across institutional hierarchies.

Phase 7 (P): The Paradoxical Inquiry

P-68: The system’s foundational paradox lies in its dependence on the very human judgment and consciousness it claims to enhance: the decisions about what should be automated, how AI should be integrated, and what constitutes educational improvement all require irreducible human wisdom that cannot itself be algorithmically determined.

B. Re-entrant Dialectical Analysis – Full Re-entry Log

Core Concept: AI’s Impact on Higher Education

Concept Classification: The Relation – a dynamic connection between technological systems and educational institutions

Inquiry Plan: (FUN:25, ESS:20, DIA:35, EXP:15, ARC:20, AXI:30, BOU:15, CRI:20, GEN:15, TRA:15)

Phase 1 (FUN): The Functional Inquiry – 25 iterations

FUN-1: AI forces universities to distinguish between what can be automated and what requires human presence, functionally separating mechanical from meaningful educational activities.

FUN-2: It creates a functional crisis by making traditional assessment methods obsolete, forcing institutions to redesign how they measure learning.

FUN-3: AI functionally transforms universities from content delivery systems into experience design platforms, shifting focus from information transfer to capability development.

FUN-4: It operates as a functional revealer, exposing which educational activities were already mechanical and which require genuine human intelligence.

FUN-5: AI functions as an efficiency accelerator in administrative processes while simultaneously making academic efficiency impossible to measure traditionally.

FUN-6: It creates functional stratification within universities, enabling automation of routine tasks while intensifying demand for high-level human insight.

FUN-7: AI functions as a temporal compressor, enabling rapid content production while forcing attention to slow processes of understanding and wisdom development.

FUN-8: It operates functionally as a mirror, reflecting back to universities which of their activities were already algorithm-like and which were genuinely educational.

FUN-9: AI functions as a boundary enforcer, making clear distinctions between transactional education (easily automated) and transformational education (requiring human presence).

FUN-10: It creates functional pressure toward platform models, where universities become curators of educational experiences rather than singular providers.

FUN-11: AI functions as a cost-benefit revealer, making visible the true economics of different educational approaches by providing baseline efficiency comparisons.

FUN-12: It operates as a functional catalyst for internal unbundling, forcing universities to explicitly organize around different value propositions.

FUN-13: AI functions as a quality amplifier, making excellent human teaching more valuable while making mediocre human teaching obsolete.

FUN-14: It creates functional dependencies where institutions must continuously adapt to maintain relevance in an AI-enabled environment.

FUN-15: AI functions as an attention redirector, forcing focus away from content mastery toward critical thinking, creativity, and complex problem-solving.

FUN-16: It operates functionally as a democratizer of information access while simultaneously increasing the premium on interpretive capacity.

FUN-17: AI functions as a collaboration intensifier, making human-to-human interaction more valuable by handling routine individual tasks.

FUN-18: It creates functional pressure toward personalization by enabling customized content delivery while highlighting the irreplaceable value of human mentorship.

FUN-19: AI functions as a time liberator for educators by handling routine tasks while creating new demands for higher-order pedagogical design.

FUN-20: It operates as a functional differentiator, separating institutions that can adapt to human-AI collaboration from those trapped in purely human models.

FUN-21: AI functions as a skills-gap revealer, making visible which human capabilities remain essential in an automated environment.

FUN-22: It creates functional evolution pressure, forcing universities to continuously redefine their value proposition in relation to technological capabilities.

FUN-23: AI functions as a scale transformer, enabling massive personalization while requiring intimate human relationships for meaningful learning.

FUN-24: It operates functionally as a purpose clarifier, forcing institutions to articulate why human-centered education matters in an AI world.

FUN-25: AI functions as a strategic forcing mechanism, compelling universities to choose between efficiency optimization and human development as primary organizing principles.

Phase 2 (ESS): The Essential Inquiry – 20 iterations

ESS-1: Essentially, AI’s impact on higher education is the forced recognition that learning and credential production are separate processes.

ESS-2: At its essence, this is the collapse of the equation between work completion and learning achievement that has structured higher education for centuries.

ESS-3: The essential transformation is the shift from universities as knowledge gatekeepers to universities as wisdom cultivators.

ESS-4: Essentially, AI reveals that much of what universities called "education" was actually sophisticated information processing that machines can do better.

ESS-5: The essence is the emergence of human capability as the scarce resource in an environment of infinite information processing.

ESS-6: At its core, this is about the university’s transition from a content institution to a context institution.

ESS-7: Essentially, AI forces the recognition that educational value lies in the quality of questions rather than the efficiency of answers.

ESS-8: The essential change is the elevation of synthesis, judgment, and wisdom as the irreplaceable human contributions to knowledge work.

ESS-9: At its essence, this transformation reveals the university’s fundamental choice between being a utility or being a community.

ESS-10: The essential impact is the unbundling of educational functions that were artificially bundled for institutional convenience rather than learning effectiveness.

ESS-11: Essentially, AI transforms higher education from a standardized mass production system to a personalized capability development platform.

ESS-12: The essence is the recognition that human presence and relationship are not inefficiencies to be optimized but core technologies of learning.

ESS-13: At its core, this is about distinguishing between education as information transfer and education as consciousness transformation.

ESS-14: Essentially, AI forces universities to confront the difference between what they do and what they claim to do.

ESS-15: The essential insight is that AI enables educational authenticity by making artificial learning processes obviously artificial.

ESS-16: At its essence, this transformation is about the forced maturation of educational institutions from content monopolies to wisdom communities.

ESS-17: Essentially, AI reveals that the university’s unique value lies in its capacity to cultivate human consciousness rather than process information.

ESS-18: The essential change is the shift from education as individual knowledge acquisition to education as collective wisdom development.

ESS-19: At its core, this is about recognizing that learning requires the kinds of inefficient processes that resist optimization.

ESS-20: Essentially, AI’s impact is to force higher education to become what it was always meant to be: a community practice devoted to human consciousness development.

Phase 3 (DIA): The Dialectical Inquiry – 35 iterations

DIA-1: The fundamental dialectical tension: AI threatens to make human education obsolete while simultaneously making genuinely human education more necessary than ever.

DIA-2: Thesis: Universities are information processing institutions. Antithesis: AI processes information better than universities. Synthesis: Universities become consciousness cultivation institutions.

DIA-3: The dialectical contradiction: AI integration appears to enhance education while potentially destroying what is most educational about education.

DIA-4: Thesis: Educational efficiency is always good. Antithesis: Learning requires inefficient processes. Synthesis: AI handles efficiency while humans focus on productive inefficiency.

DIA-5: The dialectical tension between technological possibility and educational purpose resolves through complementarity rather than competition.

DIA-6: Thesis: Human presence in education. Antithesis: AI capability in education. Synthesis: Human-AI collaboration organized around human uniqueness.

DIA-7: The contradiction between personalization and standardization resolves through AI enabling mass customization while humans provide intimate relationship.

DIA-8: Thesis: Education as content delivery. Antithesis: Education as relationship formation. Synthesis: AI delivers content while humans cultivate consciousness.

DIA-9: The dialectical resolution: AI forces education to organize around what is irreducibly human rather than what is efficiently scalable.

DIA-10: Thesis: Traditional education methods. Antithesis: AI-enabled learning systems. Synthesis: Platform universities that integrate both according to learning objectives.

DIA-11: The fundamental contradiction between efficiency imperatives and transformation requirements resolves through functional differentiation.

DIA-12: Thesis: Universities as knowledge institutions. Antithesis: AI as superior knowledge processor. Synthesis: Universities as wisdom institutions.

DIA-13: The dialectical tension between individual learning and collective education resolves through AI enabling personalized pathways within community contexts.

DIA-14: Thesis: Assessment measures learning. Antithesis: AI makes traditional assessment meaningless. Synthesis: Assessment becomes growth documentation rather than performance measurement.

DIA-15: The contradiction between technological sophistication and educational authenticity resolves through AI handling sophistication while humans focus on authenticity.

DIA-16: Thesis: Faculty as content experts. Antithesis: AI as superior content access. Synthesis: Faculty as consciousness development facilitators.

DIA-17: The dialectical resolution involves AI becoming the infrastructure that enables rather than replaces human educational activities.

DIA-18: Thesis: Educational competition. Antithesis: Educational collaboration. Synthesis: AI enables collaborative learning while maintaining individual development focus.

DIA-19: The fundamental tension between efficiency and effectiveness resolves through AI optimizing efficiency while humans optimize effectiveness.

DIA-20: Thesis: Education as preparation for work. Antithesis: AI will do most work. Synthesis: Education as preparation for consciousness, creativity, and wisdom.

DIA-21: The dialectical contradiction between technological determinism and human agency resolves through conscious choice about technology integration.

DIA-22: Thesis: Standardized education. Antithesis: Personalized learning. Synthesis: AI enables personalization within structured educational frameworks.

DIA-23: The tension between preservation of educational tradition and innovation pressure resolves through AI preserving what is valuable while eliminating what is obsolete.

DIA-24: Thesis: Universities as elite institutions. Antithesis: AI as democratizing force. Synthesis: Universities as consciousness development communities accessible to all who seek transformation.

DIA-25: The dialectical resolution involves recognizing that AI and human education operate in complementary rather than competitive domains.

DIA-26: Thesis: Education as information transfer. Antithesis: Information is freely available. Synthesis: Education as wisdom cultivation through relationship and challenge.

DIA-27: The contradiction between technological capability and human limitation resolves through technology amplifying rather than replacing human uniqueness.

DIA-28: Thesis: Institutional efficiency. Antithesis: Learning inefficiency. Synthesis: Institutional platforms that support both efficient and inefficient processes appropriately.

DIA-29: The dialectical tension between global technological forces and local educational communities resolves through conscious integration that preserves community while leveraging technology.

DIA-30: Thesis: Education as individual achievement. Antithesis: Learning as collective process. Synthesis: AI supports individual development within community learning environments.

DIA-31: The fundamental contradiction between measurable outcomes and unmeasurable transformation resolves through AI handling measurement while humans focus on transformation.

DIA-32: Thesis: Educational scarcity. Antithesis: Information abundance. Synthesis: Attention and consciousness become the scarce resources requiring cultivation.

DIA-33: The dialectical resolution recognizes that AI forces explicit choice about educational values that were previously implicit and unexamined.

DIA-34: Thesis: Technology as tool. Antithesis: Technology as environment. Synthesis: Conscious integration of AI as environment that preserves human agency in educational purposes.

DIA-35: The ultimate dialectical synthesis: AI forces education to become authentically human by handling everything that is artificial about current educational approaches.

Phase 4 (EXP): The Experiential Inquiry – 15 iterations

EXP-1: Students experience the paradox of unprecedented access to information alongside growing uncertainty about how to develop wisdom.

EXP-2: Faculty experience the tension between technological possibility and pedagogical purpose, often feeling obsolete while recognizing their increased importance.

EXP-3: Administrators experience the pressure to adopt AI technologies while lacking frameworks for evaluating their educational impact.

EXP-4: Institutions experience the contradiction between efficiency demands and the inefficient processes that characterize transformational learning.

EXP-5: Society experiences anxiety about AI replacing human capabilities while simultaneously demanding more sophisticated human judgment and creativity.

EXP-6: Employers experience the gap between AI-capable information processing and the human capabilities they actually need in employees.

EXP-7: Students experience the paradox of having more access to information while feeling less confident in their ability to use it wisely.

EXP-8: Educators experience the challenge of maintaining relevance while embracing tools that can perform many of their traditional functions.

EXP-9: The administrative experience involves navigating between technological possibilities and institutional constraints.

EXP-10: Students experience AI as both academic aid and authenticity threat, creating confusion about legitimate learning processes.

EXP-11: Faculty experience the pressure to become learning experience designers rather than content deliverers, requiring new skill development.

EXP-12: The institutional experience involves balancing innovation pressure with community preservation in educational environments.

EXP-13: The collective experience is one of fundamental uncertainty about what education should become in an AI-enabled world.

EXP-14: All stakeholders experience the tension between embracing efficiency and preserving the inefficient processes that enable transformation.

EXP-15: The overall experiential impact is the recognition that AI forces explicit choices about educational values that were previously implicit.

Phase 5 (ARC): The Archetypal Inquiry – 20 iterations

ARC-1: AI in higher education embodies the archetype of the Tool that Becomes Master, forcing recognition of human agency in technological relationships.

ARC-2: The transformation represents the Wise Teacher archetype being challenged by the Efficient Tutor, forcing clarification of educational wisdom versus information transfer.

ARC-3: AI embodies the Prometheus archetype, bringing powerful capabilities to humans while creating unforeseen consequences for educational institutions.

ARC-4: The change represents the Library of Alexandria archetype meeting the Printing Press – massive information access transforming the nature of scholarship and learning.

ARC-5: AI embodies the Mirror archetype, reflecting back to universities which of their functions were mechanical versus genuinely educational.

ARC-6: The transformation represents the Apprentice archetype evolving, where AI becomes the master of routine tasks while humans master creativity and judgment.

ARC-7: AI embodies the Catalyst archetype, accelerating educational transformations that were already latent within university systems.

ARC-8: The change represents the Guardian at the Threshold archetype, where AI becomes the test that institutions must pass to remain relevant.

ARC-9: AI embodies the Amplifier archetype, making excellent education more excellent while making poor education obviously inadequate.

ARC-10: The transformation represents the Phoenix archetype, where universities must die to their old forms to be reborn in AI-integrated configurations.

ARC-11: AI embodies the Sorter archetype, separating educational wheat from chaff by revealing which activities create genuine value.

ARC-12: The change represents the Bridge archetype, connecting traditional educational values with technological capabilities in a new synthesis.

ARC-13: AI embodies the Revealer archetype, making visible the hidden assumptions and inefficiencies in traditional educational models.

ARC-14: The transformation represents the Great Leveler archetype, democratizing access to information while creating new hierarchies of wisdom and judgment.

ARC-15: AI embodies the Collaborator archetype, requiring humans to redefine their role in partnership rather than competition with intelligent systems.

ARC-16: The change represents the Efficiency God archetype demanding optimization while the Education Spirit insists on inefficient processes of growth.

ARC-17: AI embodies the Time Lord archetype, compressing temporal cycles of information processing while expanding time available for contemplation.

ARC-18: The transformation represents the Platform Builder archetype, creating infrastructure for multiple educational approaches rather than single institutional models.

ARC-19: AI embodies the Question Generator archetype, providing infinite answers while making the quality of questions more important than ever.

ARC-20: The overall archetypal pattern is the Great Separation, where AI handles mechanical intelligence while humans focus on consciousness, wisdom, and transformational relationship.

Phase 6 (AXI): The Axiomatic Inquiry – 30 iterations

AXI-1: The fundamental axiom emerging: In an AI world, educational value shifts from what students know to how they think and who they become.

AXI-2: Core principle: AI forces the recognition that information mastery is not learning, and learning is not education.

AXI-3: Governing axiom: The irreplaceable human contribution to education is consciousness transformation, not content transmission.

AXI-4: Essential principle: AI enables efficiency in mechanical processes precisely to create space for inefficient processes of wisdom development.

AXI-5: Foundational axiom: Educational institutions survive by maximizing human uniqueness rather than competing with artificial intelligence.

AXI-6: Core principle: AI transforms universities from knowledge monopolies to wisdom cultivation communities.

AXI-7: Governing axiom: The value of human presence in education rises with the availability of artificial intelligence.

AXI-8: Essential principle: AI forces educational authenticity by making artificial learning processes obviously artificial.

AXI-9: Foundational axiom: In an AI world, the quality of questions matters more than the speed of answers.

AXI-10: Core principle: AI enables mass personalization while making intimate human relationship more valuable than ever.

AXI-11: Governing axiom: Educational transformation requires embracing AI for what it does best while intensifying human focus on what humans do uniquely.

AXI-12: Essential principle: AI forces the separation of education as information transfer from education as consciousness development.

AXI-13: Foundational axiom: The university’s survival depends on becoming more human in response to artificial intelligence, not more efficient.

AXI-14: Core principle: AI creates abundance in information and relative scarcity in wisdom, making wisdom cultivation the key differentiator.

AXI-15: Governing axiom: Educational institutions must organize around human development rather than content delivery to remain relevant in an AI world.

AXI-16: Essential principle: AI forces explicit choice between education as utility (easily automated) and education as transformation (requiring human presence).

AXI-17: Foundational axiom: The complementarity between artificial intelligence and human consciousness becomes the organizing principle of future education.

AXI-18: Core principle: AI enables educational efficiency precisely to protect educational inefficiency where inefficiency is essential for learning.

AXI-19: Governing axiom: In an AI world, educational success is measured by human capability development rather than information processing achievement.

AXI-20: Essential principle: AI forces universities to choose between optimizing for measurement and optimizing for transformation.

AXI-21: Foundational axiom: The irreducible value of human education lies in consciousness cultivation that cannot be automated.

AXI-22: Core principle: AI enables the separation of education as credentialing from education as human development.

AXI-23: Governing axiom: Educational institutions must become consciousness cultivation communities to justify their existence in an AI world.

AXI-24: Essential principle: AI forces recognition that relationship and community are technologies of learning, not inefficiencies to be eliminated.

AXI-25: Foundational axiom: The university’s unique contribution is the cultivation of wisdom through relationship, challenge, and community.

AXI-26: Core principle: AI makes visible the distinction between mechanical intelligence and conscious intelligence, requiring educational reorganization around this difference.

AXI-27: Governing axiom: Educational authenticity requires organizing around what humans uniquely contribute rather than what machines can do better.

AXI-28: Essential principle: AI enables educational institutions to focus on their irreplaceable function: consciousness transformation through human relationship.

AXI-29: Foundational axiom: The future of education lies in conscious integration of AI capability with human consciousness development.

AXI-30: Ultimate principle: AI forces education to become authentically human by making everything else artificial.

Phase 7 (BOU): The Boundary Inquiry – 15 iterations

BOU-1: The critical boundary emerges between mechanical intelligence (AI-capable) and conscious intelligence (human-unique).

BOU-2: AI forces the boundary between information processing and wisdom development to become explicit and organizationally significant.

BOU-3: The boundary between efficiency and effectiveness becomes crucial as AI optimizes the former while humans focus on the latter.

BOU-4: Educational institutions must establish boundaries between what should be automated and what must remain human-centered.

BOU-5: The boundary between individual learning and collective education requires conscious management in AI-integrated environments.

BOU-6: AI creates necessary boundaries between transactional education (easily automated) and transformational education (requiring relationship).

BOU-7: The boundary between content delivery and consciousness cultivation becomes the organizing principle of educational reform.

BOU-8: Institutions must establish boundaries between utility functions (AI-enhanced) and community functions (human-intensive).

BOU-9: The boundary between standardization and personalization requires sophisticated management through platform approaches.

BOU-10: AI forces boundaries between what can be measured and what can only be witnessed in educational assessment.

BOU-11: The boundary between technological sophistication and educational authenticity must be consciously maintained.

BOU-12: Educational institutions require boundaries between innovation pressure and community preservation.

BOU-13: The boundary between efficiency optimization and transformation cultivation becomes organizationally critical.

BOU-14: AI necessitates boundaries between information abundance and attention scarcity in educational design.

BOU-15: The fundamental boundary is between education as a utility and education as a community for consciousness development.

Phase 8 (CRI): The Critical Inquiry – 20 iterations

CRI-1: AI implementation in education may serve corporate interests in data extraction and market penetration rather than genuine learning improvement.

CRI-2: The efficiency rhetoric around AI integration often conceals cost-cutting imperatives that reduce educational quality while claiming enhancement.

CRI-3: AI-driven personalization may actually represent sophisticated manipulation techniques that shape student behavior rather than support learning autonomy.

CRI-4: The emphasis on AI skills and digital literacy may serve economic system needs while diverting attention from critical thinking and consciousness development.

CRI-5: AI integration discourse may function as ideological cover for the corporatization of higher education through technological dependence.

CRI-6: The promise of AI democratization may actually increase educational inequality by creating new forms of technological stratification.

CRI-7: AI implementation often serves administrative convenience and control rather than pedagogical improvement or student development.

CRI-8: The rhetoric of innovation and future-readiness may pressure institutions into adopting technologies that undermine their educational mission.

CRI-9: AI integration may serve surveillance and behavioral modification purposes that conflict with educational autonomy and critical thinking development.

CRI-10: The emphasis on efficiency and measurement may systematically eliminate the unmeasurable aspects of education that are most valuable for human development.

CRI-11: AI adoption may create vendor dependencies that compromise institutional autonomy and educational decision-making.

CRI-12: The focus on technological fixes may divert attention from structural problems in higher education that require systemic change.

CRI-13: AI integration may serve to deskill educators while claiming to enhance their capabilities, reducing professional autonomy and judgment.

CRI-14: The promise of personalization may mask standardization processes that reduce educational diversity while claiming to support individual differences.

CRI-15: AI implementation may prioritize technological sophistication over pedagogical wisdom, leading to educational approaches that are technically advanced but educationally impoverished.

CRI-16: The efficiency imperative may systematically eliminate the inefficient processes that are essential for consciousness transformation and wisdom development.

CRI-17: AI integration discourse may function to make technological adoption appear inevitable while concealing that it represents particular choices serving specific interests.

CRI-18: The emphasis on AI collaboration may actually reduce human agency and critical thinking while claiming to enhance human capability.